How to upload DE user-generated data to DE user community

Users can request that a self-generated dataset be promoted to a DestinE dataset.

To do so, users open a ticket on the DestinE Help Desk in which they describe their dataset and why it should be promoted.

The procedure the user has to follow is described in "Promote user data to become DestinE data".

The upload of the user dataset to the data lake is subject to approval by a board.

Once the request is approved, DEDL operators upload the user data by following the procedure below.

Preparation for upload

Before the upload, the user-generated dataset must comply with the following rules:

The dataset must be organized as follows:

MY_DATASET_ID/
    metadata/
        collection.json
        items/
            ITEM_1_ID.json
            ITEM_2_ID.json
            ...
    data/
        ...

Ideally, the data folder should be organized by date: ./year/month/day
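As an illustration of the date-based layout, the following minimal sketch builds the relative path of a data file from its date. The function name, the zero-padding of month and day, and the example file name are assumptions made for this example; the source only states the ./year/month/day ordering.

```python
from datetime import date
from pathlib import PurePosixPath


def dated_data_path(day: date, filename: str) -> PurePosixPath:
    """Return data/<year>/<month>/<day>/<filename> for the given date.

    Zero-padding (03 rather than 3) is an assumption of this sketch.
    """
    return PurePosixPath(
        "data",
        f"{day.year:04d}",
        f"{day.month:02d}",
        f"{day.day:02d}",
        filename,
    )


# Hypothetical file name, used only to show the resulting layout.
print(dated_data_path(date(2024, 3, 7), "tile_001.nc"))
```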

The dataset is provided as a STAC collection. Apart from the data itself, it must also contain the metadata describing the collection and the items, in a format compliant with the STAC spec.

Use the STAC validator tool to ensure that the provided metadata comply with the STAC standard.

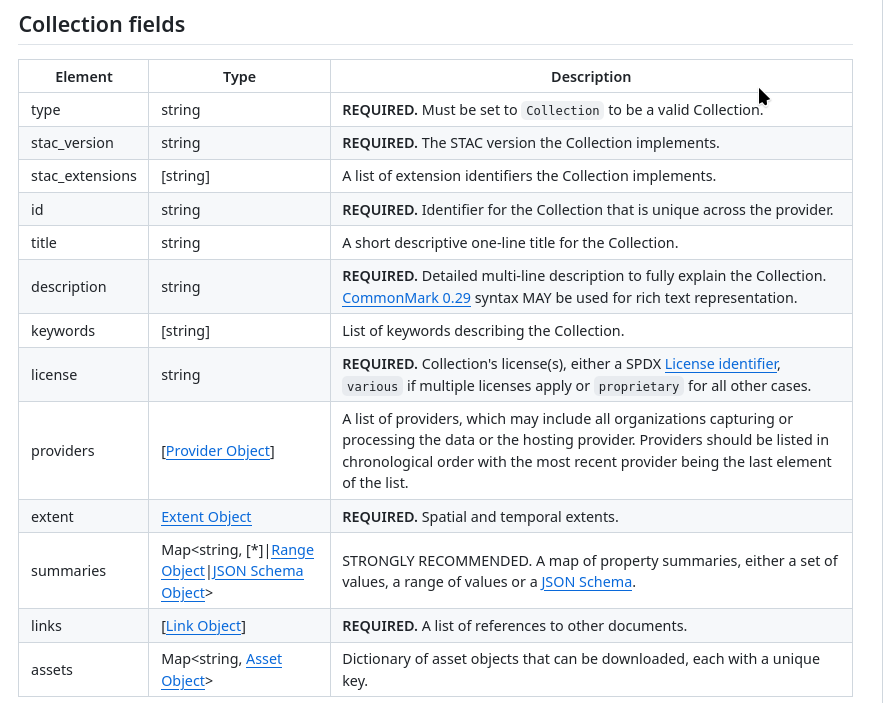

The collection metadata must include the mandatory fields from the STAC collection spec.

In addition, the collection metadata must include keywords. These shall be aligned with the keywords of the existing HDA datasets, and an additional "User generated" keyword shall be added.

The providers section must contain the processor, producer and licensor providers.

The host provider shall be DEDL.
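The collection rules above can be pre-checked before running a full STAC validation. The sketch below is not a replacement for the STAC validator: it only looks for the fields that the STAC 1.0.0 collection spec marks as required, the keyword, and the provider roles. The exact keyword spelling ("User generated"), the host provider name ("DEDL") and the function name are assumptions taken from the wording of this page.

```python
from __future__ import annotations

# Fields required by the STAC 1.0.0 Collection spec.
REQUIRED_FIELDS = ("type", "stac_version", "id", "description", "license", "extent", "links")
REQUIRED_ROLES = {"processor", "producer", "licensor", "host"}
USER_KEYWORD = "User generated"  # assumed exact spelling
HOST_NAME = "DEDL"               # assumed exact provider name


def check_collection(collection: dict) -> list[str]:
    """Return a list of human-readable problems; an empty list means no problem found."""
    problems = [f"missing mandatory field: {f}" for f in REQUIRED_FIELDS if f not in collection]

    if USER_KEYWORD not in (collection.get("keywords") or []):
        problems.append(f"missing keyword: {USER_KEYWORD}")

    providers = collection.get("providers") or []
    roles = {role for p in providers for role in (p.get("roles") or [])}
    problems += [f"no provider with role: {r}" for r in sorted(REQUIRED_ROLES - roles)]

    # Every provider carrying the host role must be the expected one.
    for p in providers:
        if "host" in (p.get("roles") or []) and p.get("name") != HOST_NAME:
            problems.append(f"host provider is not {HOST_NAME}: {p.get('name')}")
    return problems


# Illustrative collection with placeholder values only.
example = {
    "type": "Collection", "stac_version": "1.0.0", "id": "MY_DATASET_ID",
    "description": "placeholder", "license": "proprietary",
    "extent": {"spatial": {"bbox": [[-180, -90, 180, 90]]},
               "temporal": {"interval": [["2024-01-01T00:00:00Z", None]]}},
    "links": [], "keywords": [USER_KEYWORD],
    "providers": [{"name": "Example Org", "roles": ["producer", "processor", "licensor"]},
                  {"name": HOST_NAME, "roles": ["host"]}],
}
print(check_collection(example))
```

In practice the dictionary would be read from ./metadata/collection.json with json.load.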

Step 1 Notify the IAM service if new IAM roles are required for the dataset

If the dataset will be available only to specific users and new IAM roles are needed to describe this user group, contact the IAM service to create them.

Verify that the new role is available in the user JWT access token.
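One way to check the token is to decode its payload and look for the role. The sketch below decodes a compact JWT without verifying its signature, which is enough for inspection but not for authentication. Where the roles live inside the token depends on the IAM configuration; the default claim path used here (realm_access.roles, as in Keycloak-style tokens) and the function names are assumptions.

```python
import base64
import json


def jwt_claims(token: str) -> dict:
    """Decode the payload of a compact JWT. The signature is NOT verified."""
    parts = token.split(".")
    if len(parts) != 3:
        raise ValueError("not a compact JWT (expected three dot-separated parts)")
    payload = parts[1]
    payload += "=" * (-len(payload) % 4)  # restore stripped base64url padding
    return json.loads(base64.urlsafe_b64decode(payload))


def has_role(token: str, role: str, claim_path=("realm_access", "roles")) -> bool:
    """Return True if `role` is listed under `claim_path` in the token payload.

    The default claim path is an assumption; adjust it to the actual token layout.
    """
    node = jwt_claims(token)
    for key in claim_path:
        if not isinstance(node, dict) or key not in node:
            return False
        node = node[key]
    return isinstance(node, list) and role in node
```

For example, has_role(access_token, "my-new-role") returns True only when that (hypothetical) role name appears in the decoded token.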

Step 2 Upload data to the data lake

Once the dataset is verified and validated, we can upload it to the data lake.

Upload the content of the data folder to the DEDL Internal Fresh Data Pool (FDP) S3 bucket. Tools such as s3cmd (from the command line) or boto3 (from Python code) can be used.
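A minimal boto3-based sketch of this upload is shown here. It walks the data folder and uploads each file under a key prefix, preserving the folder layout. The bucket name, endpoint URL and key prefix are not given on this page, so they are left as parameters; using the dataset ID as key prefix is an assumption. Credentials are expected to come from the usual boto3 configuration (environment variables or config files).

```python
from pathlib import Path


def iter_uploads(data_dir, key_prefix):
    """Yield (local_path, object_key) for every file found under data_dir."""
    root = Path(data_dir)
    prefix = key_prefix.strip("/")
    for path in sorted(root.rglob("*")):
        if path.is_file():
            relative = path.relative_to(root).as_posix()
            yield path, f"{prefix}/{relative}" if prefix else relative


def upload_data_folder(s3_client, data_dir, bucket, key_prefix):
    """Upload every file under data_dir and return the object keys written."""
    keys = []
    for path, key in iter_uploads(data_dir, key_prefix):
        s3_client.upload_file(str(path), bucket, key)  # boto3 S3 client method
        keys.append(key)
    return keys


def make_client(endpoint_url):
    """Create an S3 client for a custom endpoint (boto3 is imported lazily)."""
    import boto3
    return boto3.client("s3", endpoint_url=endpoint_url)


# Usage with placeholder values (endpoint, bucket and prefix are hypothetical):
# client = make_client("https://s3.example.invalid")
# upload_data_folder(client, "./data", "my-fdp-bucket", "MY_DATASET_ID")
```

Passing the client in as an argument keeps the traversal logic testable without network access.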

Insert the collection.json metadata file into the DEDL Internal FDP database with pyPgSTAC:

pypgstac load collections ./metadata/collection.json

Insert items metadata into the DEDL Internal FDP database with pyPgSTAC:

find ./metadata/items -maxdepth 1 -type f -exec pypgstac load items {} \;
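The find command above starts one pypgstac process per item. For collections with many items, an alternative sketch is to merge the item files into a single newline-delimited JSON file and load it in one call. This assumes that the installed pypgstac version accepts ndjson input for load items, which should be checked against its documentation; the function name and the output file name are hypothetical.

```python
import json
from pathlib import Path


def items_to_ndjson(items_dir, output_file):
    """Write each *.json item in items_dir as one line of output_file.

    Returns the number of items written. Files are processed in sorted order.
    """
    count = 0
    with open(output_file, "w", encoding="utf-8") as out:
        for path in sorted(Path(items_dir).glob("*.json")):
            item = json.loads(path.read_text(encoding="utf-8"))
            # Compact separators guarantee exactly one item per line.
            out.write(json.dumps(item, separators=(",", ":")) + "\n")
            count += 1
    return count


# Usage with the layout described on this page (output name is hypothetical):
# items_to_ndjson("./metadata/items", "./metadata/items.ndjson")
# then: pypgstac load items ./metadata/items.ndjson
```

Parsing each file with json.loads before writing also catches malformed item files before anything reaches the database.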

Configure HDA permissions for the newly added dataset

Add a new entry for the dataset in the HDA product types configuration file.

Configure the appropriate IAM roles in the scopes section of the product type configuration.

Step 3 Inform the user of the successful operation

Once the dataset is uploaded, test that you can discover its items and download data without any issue. Then, notify the user through their helpdesk ticket that their dataset is fully uploaded and available from the HDA API.
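The discovery part of this test can be sketched as a STAC API item search. The example assumes that the HDA API exposes a STAC-compliant POST /search endpoint and accepts a bearer access token; the root URL, the token handling and the function names are assumptions, not confirmed by this page.

```python
import json
import urllib.request


def build_item_search(stac_root, collection_id, access_token, limit=1):
    """Build a STAC API item search request restricted to one collection."""
    body = json.dumps({"collections": [collection_id], "limit": limit}).encode("utf-8")
    return urllib.request.Request(
        url=f"{stac_root.rstrip('/')}/search",
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {access_token}",  # assumed auth scheme
        },
    )


def first_item_assets(request):
    """Send the search and return {asset_name: href} for the first item found."""
    with urllib.request.urlopen(request, timeout=30) as response:
        features = json.load(response).get("features", [])
    if not features:
        raise LookupError("search returned no items")
    assets = features[0].get("assets", {})
    return {name: asset["href"] for name, asset in assets.items()}


# Usage with placeholder values (the root URL is hypothetical):
# req = build_item_search("https://hda.example.invalid/stac", "MY_DATASET_ID", token)
# print(first_item_assets(req))
```

Downloading one of the returned asset hrefs then confirms that the data itself is reachable with the expected permissions.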